Data.BioTracer

HUGIN/IFR BIOTRACER Demonstration Activity

Data Input From CSV File With Labelled Data

In this tutorial we learn the structure and parameters of a network of discrete variables from a data file.

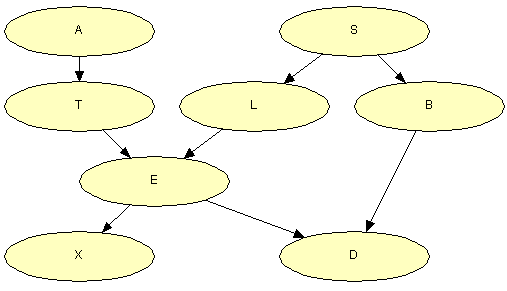

We use a data file generated from the well-known Asia example (Figure 1).

Figure 1: Asia network - the structure from which the data were sampled

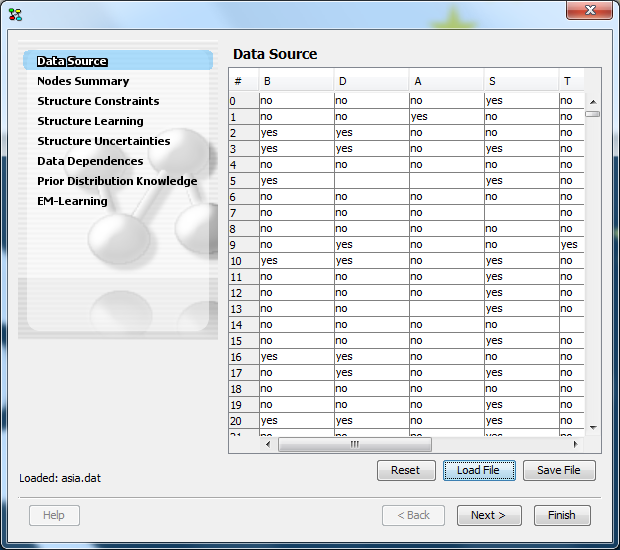
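The tutorial does not say how the cases were generated. One common way to sample cases from a network like Asia is forward sampling: draw each variable after its parents, using its conditional probability table. The sketch below is an illustration only. The structure and probabilities are the values commonly quoted for the Asia network and are assumptions here, not values taken from this tutorial; the only relation the tutorial itself states is that E is the logical OR of T and L. The function names are hypothetical.

```python
import random

def draw(rng, p_yes):
    """Return "yes" with probability p_yes, otherwise "no"."""
    return "yes" if rng.random() < p_yes else "no"

def sample_case(rng):
    """Forward-sample one case; all probabilities below are assumed values."""
    a = draw(rng, 0.01)                            # A: no parents
    s = draw(rng, 0.50)                            # S: no parents
    t = draw(rng, 0.05 if a == "yes" else 0.01)    # T depends on A
    l = draw(rng, 0.10 if s == "yes" else 0.01)    # L depends on S
    b = draw(rng, 0.60 if s == "yes" else 0.30)    # B depends on S
    e = "yes" if "yes" in (t, l) else "no"         # E = T or L (deterministic)
    x = draw(rng, 0.98 if e == "yes" else 0.05)    # X depends on E
    p_d = {("yes", "yes"): 0.9, ("yes", "no"): 0.7,
           ("no", "yes"): 0.8, ("no", "no"): 0.1}[(e, b)]
    d = draw(rng, p_d)                             # D depends on E and B
    # Same column order as the header of the sample file.
    return {"B": b, "D": d, "A": a, "S": s, "T": t, "L": l, "E": e, "X": x}

rng = random.Random(0)  # fixed seed so the run is repeatable
cases = [sample_case(rng) for _ in range(1000)]
print(cases[0])
```

This sketch produces complete cases only; it does not reproduce the missing values that appear in the sample file.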

The data file, asia.dat, consists of 10000 cases. A sample can be seen in Figure 2.

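| B | D | A | S | T | L | E | X |
|---|---|---|---|---|---|---|---|
| "yes" | "yes" | "no" | "yes" | "no" | "no" | "no" | "no" |
| "yes" | "yes" | "no" | "no" | "no" | | "no" | "no" |
| "no" | "no" | "no" | "no" | "no" | "no" | "no" | "no" |
| "no" | "no" | "no" | "no" | "no" | "no" | "no" | "no" |
| "yes" | "yes" | "no" | "no" | "no" | "no" | "no" | "no" |
| "no" | | "no" | "no" | "no" | "no" | "no" | "no" |
| "no" | "yes" | "no" | "yes" | "no" | "no" | "no" | "no" |
| "no" | "no" | "no" | "yes" | "no" | "no" | "no" | "no" |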

Figure 2: Sample from asia.dat
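Before loading the file into the wizard, it can be useful to check it outside HUGIN. The sketch below reads a few rows of the sample with Python's standard csv module and counts the empty fields per column. It assumes that the file is comma-separated with a header row and that an empty field means a missing value; the function name `read_cases` is hypothetical.

```python
import csv
import io

# A few rows of the sample in Figure 2, embedded so the sketch runs without asia.dat.
SAMPLE = '''B,D,A,S,T,L,E,X
"yes","yes","no","yes","no","no","no","no"
"yes","yes","no","no","no",,"no","no"
"no","no","no","no","no","no","no","no"
"no",,"no","no","no","no","no","no"
'''

def read_cases(handle):
    """Return one dict per case; empty fields become None (assumed to mean missing)."""
    reader = csv.DictReader(handle)
    return [
        {name: (value if value != "" else None) for name, value in row.items()}
        for row in reader
    ]

cases = read_cases(io.StringIO(SAMPLE))

# Count missing values per column.
missing = {name: sum(case[name] is None for case in cases) for name in cases[0]}
print(missing)
```

To check the real file, replace `io.StringIO(SAMPLE)` with `open("asia.dat", newline="")`.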

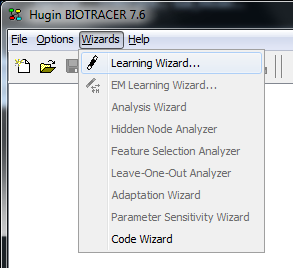

To open the learning wizard, click Wizards -> Learning Wizard (Figure 3).

Figure 3: Finding the learning wizard

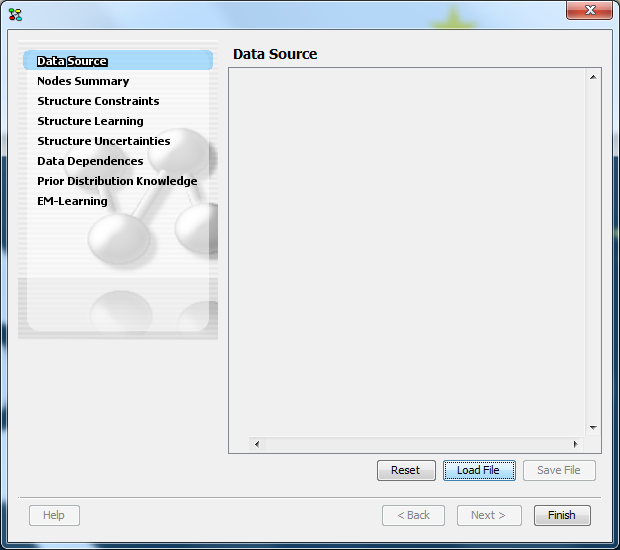

The learning wizard should appear (Figure 4).

Figure 4: Learning wizard - no data file has been loaded

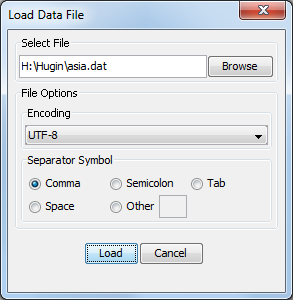

To load the data file, click the Load button and select the file in the loading dialog that appears (Figure 5).

Figure 5: Loading a file

The data file has been loaded and the values can be inspected (Figure 6).

Figure 6: Inspection of data items

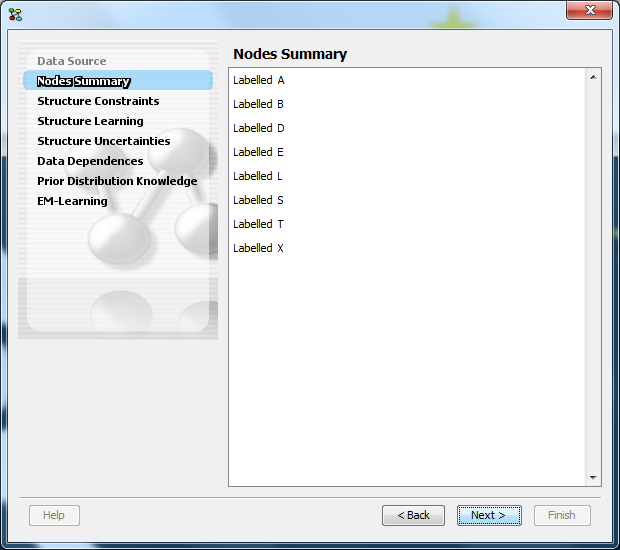

To continue with structure learning, click the Next button. A summary of the nodes induced from the data set appears (Figure 7).

Figure 7: Summary of nodes

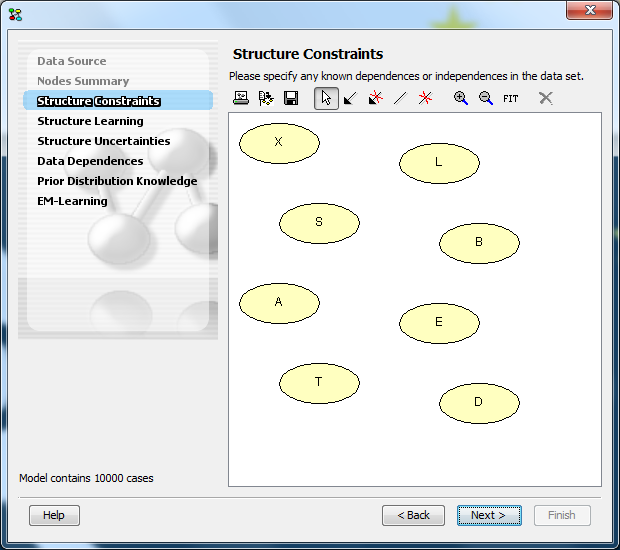

Click the Next button to get to the structure constraints (Figure 8).

Figure 8: Structure constraints

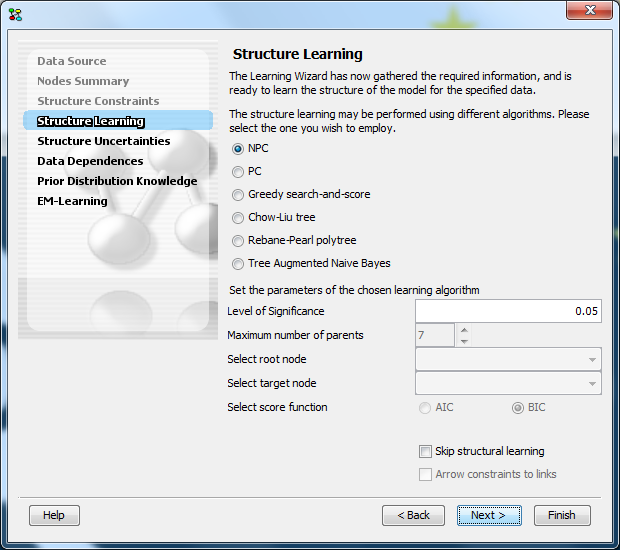

Here we can specify any constraints. For now, just click the Next button, select the NPC learning algorithm (Figure 9), and click the Next button again.

Figure 9: Select learning algorithm

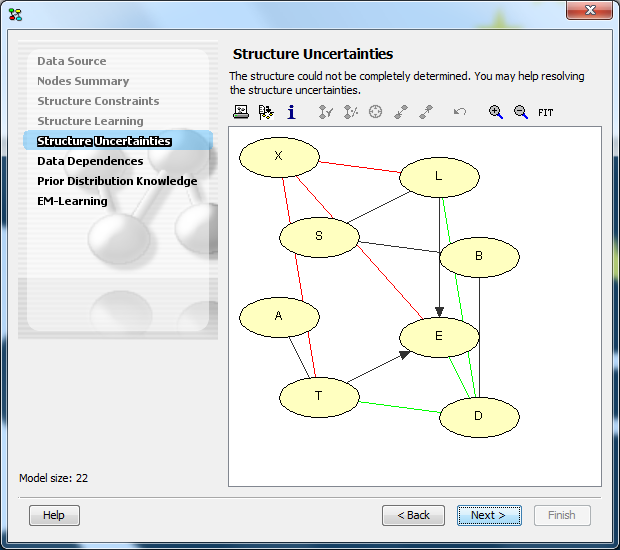

Next we have the option to resolve any structure uncertainties (Figure 10). Just click the Next button.

Figure 10: Structure uncertainties

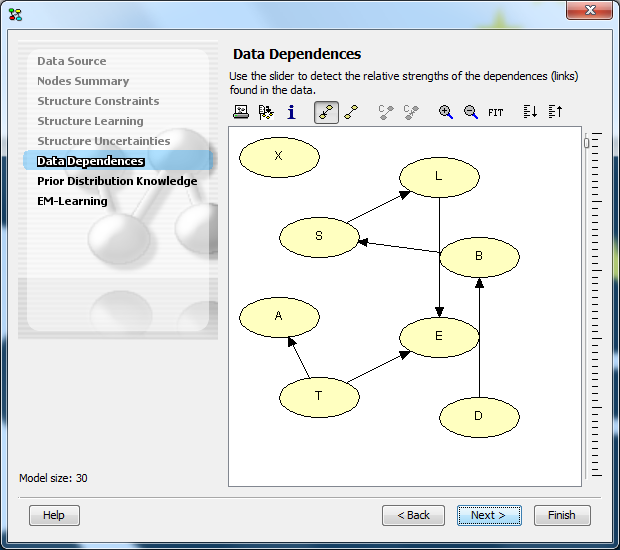

Next we can inspect the strength of the dependences found in the data (Figure 11). The dialog also shows the learned structure.

Obviously, the structure of the original network has not been recovered perfectly. The problem is that variable E depends deterministically on variables T and L through a logical OR (E stands for Tuberculosis or Lung cancer). This means that (i) T and X are conditionally independent given E, (ii) L and X are conditionally independent given E, and (iii) E and X are conditionally independent given L and T. Depending on the choices made by the user, many different final structures (including the original structure shown in Figure 1) can be generated from this intermediate structure by adjusting the structure uncertainties.
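The three independence statements can be checked numerically on a small example. The sketch below builds the exact joint distribution over T, L, E and X with E = T or L, and computes conditional mutual information, which is zero exactly when two variables are conditionally independent. The probabilities are assumed values chosen for illustration, T and L are treated as independent for simplicity, and the helper names are hypothetical. This is not the test used by the NPC algorithm, only a way to see why a method based on independence tests cannot decide between the links around E.

```python
import itertools
import math

NAMES = ("T", "L", "E", "X")
P_T, P_L = 0.0104, 0.055                    # assumed P(T=yes), P(L=yes)
P_X_GIVEN_E = {True: 0.98, False: 0.05}     # assumed P(X=yes | E)

def joint():
    """Exact joint over (T, L, E, X) with E = T or L."""
    table = {}
    for t, l, x in itertools.product((True, False), repeat=3):
        e = t or l
        p_x = P_X_GIVEN_E[e] if x else 1.0 - P_X_GIVEN_E[e]
        table[(t, l, e, x)] = (P_T if t else 1 - P_T) * (P_L if l else 1 - P_L) * p_x
    return table

def marginal(table, keep):
    """Sum the joint down to the variables named in keep."""
    idx = [NAMES.index(name) for name in keep]
    out = {}
    for state, p in table.items():
        key = tuple(state[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out

def cmi(table, a, b, given=()):
    """Conditional mutual information I(a; b | given) in nats."""
    given = tuple(given)
    p_abz = marginal(table, (a, b) + given)
    p_az = marginal(table, (a,) + given)
    p_bz = marginal(table, (b,) + given)
    p_z = marginal(table, given)
    total = 0.0
    for (va, vb, *vz), p in p_abz.items():
        if p > 0.0:
            vz = tuple(vz)
            total += p * math.log(p * p_z[vz] / (p_az[(va,) + vz] * p_bz[(vb,) + vz]))
    return total

table = joint()
print("I(T;X|E)   =", cmi(table, "T", "X", ("E",)))       # (i)   zero up to rounding
print("I(L;X|E)   =", cmi(table, "L", "X", ("E",)))       # (ii)  zero up to rounding
print("I(E;X|T,L) =", cmi(table, "E", "X", ("T", "L")))   # (iii) zero up to rounding
print("I(E;X)     =", cmi(table, "E", "X"))               # clearly positive
```

E and X are strongly dependent on their own, yet the dependence vanishes once T and L are known, because T and L together determine E. The data alone therefore cannot tell whether X should be linked to E or to T and L.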

Figure 11: Inspect data dependences

Use the slider to adjust the threshold of displayed links. Use the buttons to switch between displaying only the learned links and displaying the dependences between all nodes.

Click the Next button to continue to EM learning.

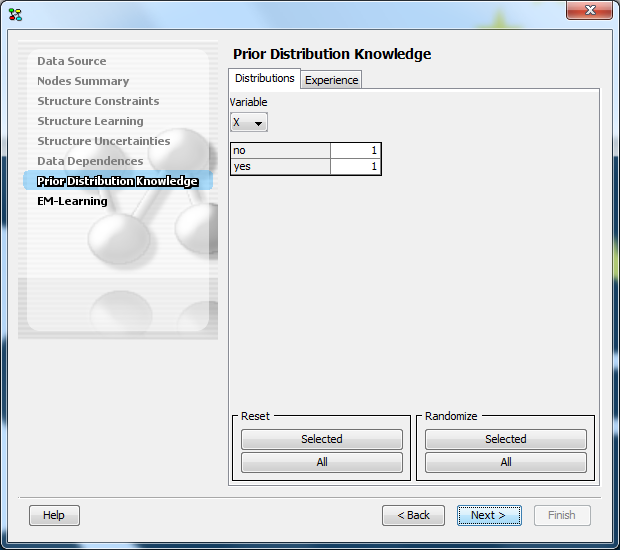

We have the option of specifying any prior distribution knowledge (Figure 12).

Figure 12: Specify prior distribution knowledge

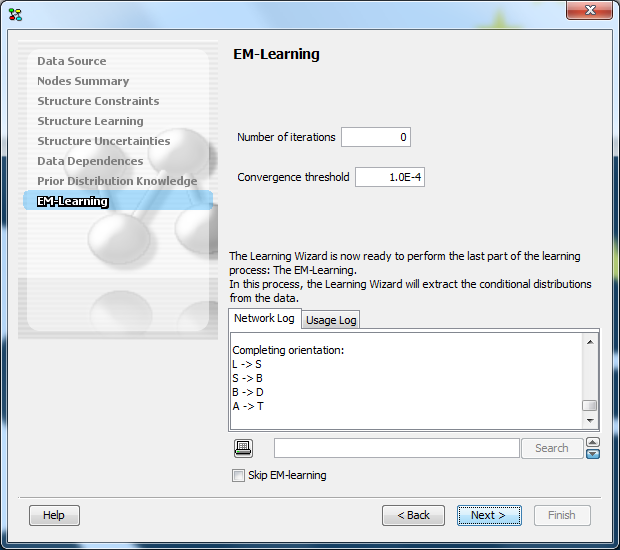

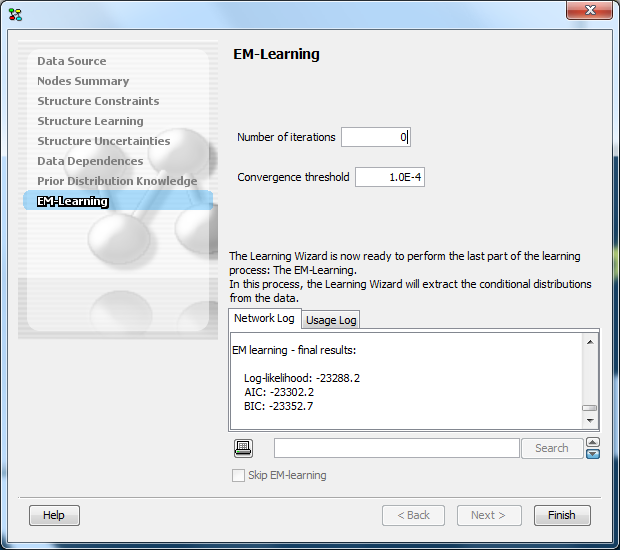

Click the Next button to get to EM learning (Figure 13).

Figure 13: EM Learning - ready to begin learning.

To perform EM learning, click the Next button. A summary of the EM learning is then presented (Figure 14).

Figure 14: EM summary
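To give an idea of what EM learning does when some values are missing, the sketch below runs EM on a tiny two-node network E -> X in which E is unobserved in some cases. In the E-step each incomplete case is split between E = yes and E = no according to the current parameters; in the M-step the parameters are re-estimated from the resulting expected counts. The case counts and starting values are invented for illustration, and this is a minimal sketch of the general technique, not HUGIN's implementation.

```python
import math

def em(cases, iterations=30):
    """cases: list of (e, x); e is True, False or None (missing), x is True or False."""
    p_e = 0.5                           # initial guess for P(E=yes)
    p_x = {True: 0.7, False: 0.3}       # initial guess for P(X=yes | E)
    history = []                        # log-likelihood before each update
    for _ in range(iterations):
        n_e = {True: 0.0, False: 0.0}   # expected count of E=v
        n_ex = {True: 0.0, False: 0.0}  # expected count of E=v and X=yes
        loglik = 0.0
        for e, x in cases:
            # P(E=v, X=x) under the current parameters.
            pr = {v: (p_e if v else 1.0 - p_e) * (p_x[v] if x else 1.0 - p_x[v])
                  for v in (True, False)}
            if e is None:
                # E-step: posterior over the missing value of E given X.
                evidence = pr[True] + pr[False]
                weights = {v: pr[v] / evidence for v in pr}
            else:
                evidence = pr[e]
                weights = {e: 1.0, (not e): 0.0}
            loglik += math.log(evidence)
            for v, w in weights.items():
                n_e[v] += w
                if x:
                    n_ex[v] += w
        history.append(loglik)
        # M-step: maximum-likelihood estimates from the expected counts.
        p_e = n_e[True] / len(cases)
        p_x = {v: n_ex[v] / n_e[v] for v in (True, False)}
    return p_e, p_x, history

# Invented data: 100 complete cases and 50 cases where E is missing.
cases = ([(True, True)] * 18 + [(True, False)] * 2 +
         [(False, True)] * 5 + [(False, False)] * 75 +
         [(None, True)] * 10 + [(None, False)] * 40)

p_e, p_x, history = em(cases)
print("P(E=yes) =", p_e)
print("P(X=yes | E) =", p_x)
print("log-likelihood: first", history[0], "last", history[-1])
```

The log-likelihood of the observed data never decreases from one iteration to the next, which is the property that makes EM a usable stopping criterion.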

Click the Finish button to close the wizard.


"A data input tool"